Game Over

Softimage joins the Spy Kids on a most challenging mission

softimage.com from 2003

If you have ever dreamed of having your own robot, read what Japan’s Digital Frontier did with SOFTIMAGE®|XSI® for the Armored Core series on PlayStation 2. Digital Frontier used the 3ds max converter to have access to the best rendering in the business. You will be amazed by the results.

I don’t know about you, but I love robots. In everything from James Cameron’s original The Terminator to Ted Hughes’ timeless The Iron Man (his 1968 children’s book was further immortalized thirty-one years later in the animated film The Iron Giant) to those dealies that put our cars together, the man-made mechs have been models of ingenuity, resilience and unbridled power. In the video game world, of course, there is something vicariously satisfying, yet guilt-free, about attacking something that, unlike our Humpty-Dumpty selves, can ostensibly be put back together again.

The thrill of such mechanized combat reaches its apogee in From Software’s Armored Core series, which first appeared in 1996 and achieved its third definitive version in April 2002. As part of the roll-out for the highly anticipated PlayStation 2 experience, production company Digital Frontier made extensive use of SOFTIMAGE|XSI to create a highly effective and affective television commercial. But let’s return to Armored Core for a second.

Simply put, Armored Core lets you kick some serious ass as a so-called Raven mercenary, a mech-for-hire whose sole goal is to keep your vehicle in top shape. Each mission earns money for repairs, but also makes those repairs necessary. Luckily, you have checked your altruism at the door and are only intent on pulling in the cash. With a growing arsenal of weaponry, your chosen line of work is never boring. With an almost obsessive attention to mechanical detail, stunning graphics and arresting live action, Armored Core takes the fun of action very, very seriously.

Founded in 1994 and now boasting a staff of sixty, Digital Frontier handles a wide variety of television commercials and programs, feature films, games and a plethora of other projects from their Tokyo offices. Digital Frontier’s Yasuhiro Ohtuka was the CG director on the Armored Core commercial. As a former software support specialist, Ohtuka knew he and his team had their work cut out for them, and knew the benefits that SOFTIMAGE|XSI could provide. One problem, however: much of their data was still in one of those “other” software systems:

“We used SOFTIMAGE|XSI straight through the CG production process for pre-visualization, modeling, animation, and rendering,” says Ohtuka matter-of-factly. “We also made great use of Avid|DS for final compositing, but that’s another story. We were happy to use SOFTIMAGE|XSI, but a bit concerned about the transition out of the other software package. As it turned out, however, there was no need to worry: we were able to move all of our data into SOFTIMAGE|XSI with the latest version of the 3ds max converter. We then grouped all the elements in SOFTIMAGE|XSI and did some lump-sum editing. That way, we had great control over the feel of the material.”

Despite his initial concerns, Ohtuka was nevertheless intent on using SOFTIMAGE|XSI on the project. The reason? Two words: Final Gathering!

“Simply put, we wanted to render our mechs with the advantages of SOFTIMAGE|XSI’s Final Gathering,” Ohtuka explains. “SOFTIMAGE|XSI’s rendering quality is extremely high. Using the Render Tree together with Final Gathering proved to be even more than we expected. We could explore different looks through the Render Tree and then, with Final Gathering, create an extremely high-quality image. Final Gathering, integrated within SOFTIMAGE|XSI, is just part of what makes it a supremely powerful tool, however. Functions like Dynamics, Hair, Fur, Particles, Function Curves, UV editing of textures, Subdivision Surfaces and the Animation Mixer add extra advantages to what is simply a great system.”

Ohtuka’s praise for SOFTIMAGE|XSI doesn’t stop with the present system. Making specific reference to SOFTIMAGE|XSI’s productivity advances, Ohtuka sees great things coming in the future:

“XSI’s high-level modeling, animation and editing tools, such as the Animation Mixer and scripting functions, enable us to add functionality and exchange data through our networked environment, and Net View will actively lead us to ever-higher levels of productivity.”

When it gets right down to it, haven’t you always wanted a robot? An uncomplaining, ever-ready mech prepared to get you the paper, turn on the TV, mix you a drink, fix you a snack and threaten your neighbors? OK, maybe it’s just me, but Digital Frontier has made those childhood dreams come true. For that, they have our thanks.

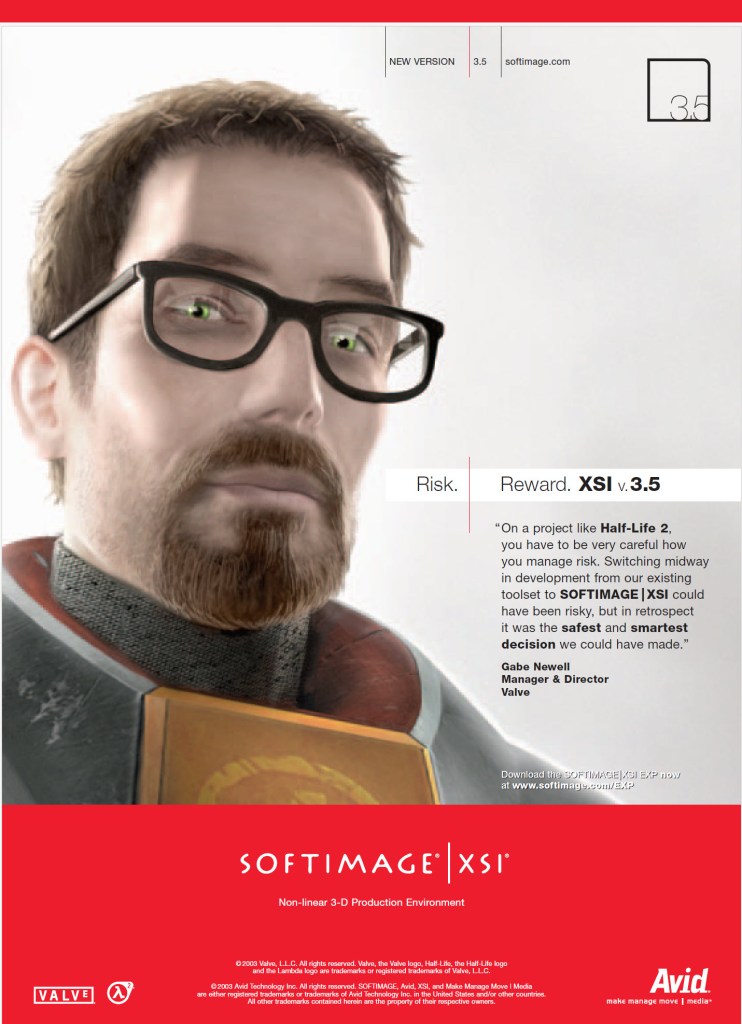

Risk. Reward. XSI v.3.5

NEW VERSION 3.5 softimage.com

“On a project like Half-Life 2, you have to be very careful how you manage risk. Switching midway in development from our existing toolset to SOFTIMAGE|XSI could have been risky, but in retrospect it was the safest and smartest decision we could have made.”

Gabe Newell

Manager & Director

Valve

By Bill Desowitz | Monday, July 28, 2003 at 12:00am

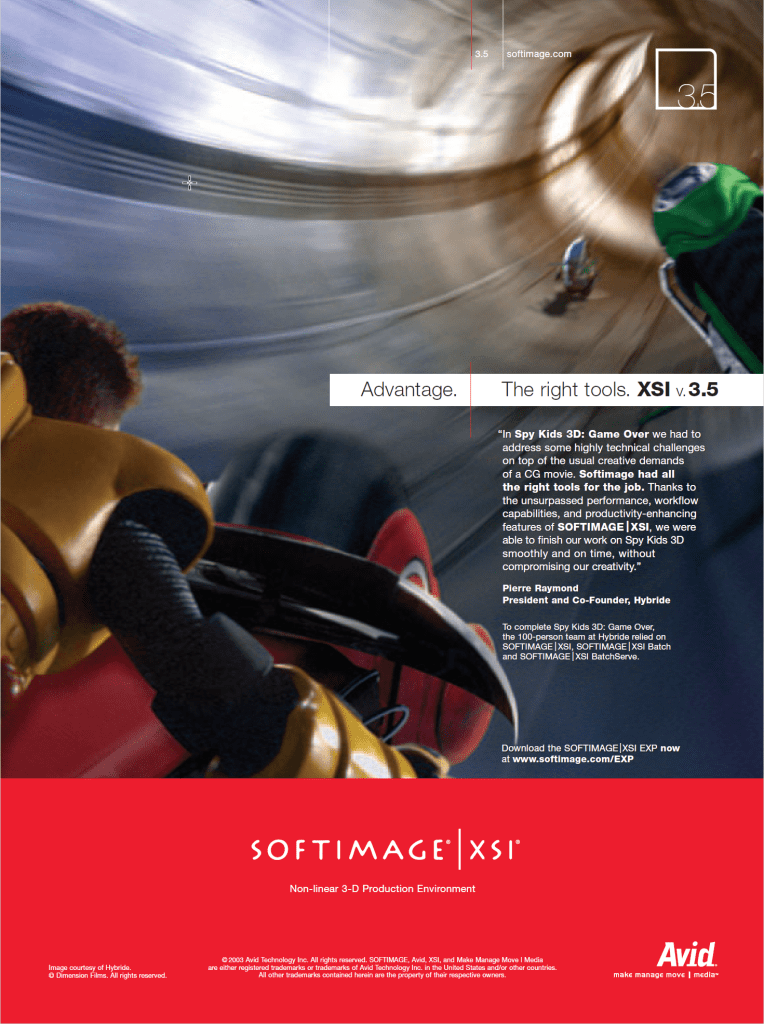

Hybride used a range of Softimage systems to create animation and effects for the box-office topper SPY KIDS 3D: GAME OVER. The 100-person team at Hybride relied on the SOFTIMAGE|XSI product, SOFTIMAGE|XSI Batch and SOFTIMAGE|XSI BatchServe.

“Compared with SPY KIDS 2, SPY KIDS 3D includes more extensive and sophisticated effects shots,” said Hybride president and co-founder Pierre Raymond. “Also, with large sections of the movie being stereoscopic (which requires the audience to wear 3D glasses), we had to address some highly technical challenges and a doubling of rendering requirements, on top of the usual creative demands of a CG movie. Softimage had all the right tools for the job. Thanks to the unsurpassed performance, workflow capabilities and productivity-enhancing features of SOFTIMAGE|XSI, we were able to finish our work on SPY KIDS 3D smoothly and on time, without compromising our creativity.”

The film takes the kids into a virtual-reality video game. Once the characters enter the video game the audience puts on 3D glasses. From the design and animation of the CG versions of Grandpa and robot characters to the creation of more than 15 different motor vehicles and dozens of virtual environments, every element had to be rendered twice to create the illusion of a 3D environment. Hybride built a powerful 200-CPU render farm based on the SOFTIMAGE|XSI Batch system, managed by the SOFTIMAGE|XSI BatchServe software.

“To create a sense of depth perceptible with special 3D glasses, we used the amazingly flexible render tree in SOFTIMAGE|XSI to render each shot twice, first as if it were seen from the left eye and then as if it were seen from the right eye, and then interleaved the two shots,” explains Marc Bourbonnais, senior technical director at Hybride. “One of the other unusual aspects of the movie is that almost 75% of the live action was shot on a greenscreen, the rest being totally computer generated. In fact, in most shots, the only real elements are the actors! We made extensive use of the FXTree to key the greenscreen actors into the CG scenes so that we could easily change their timing and positioning within these scenes, just as if they had been CG characters themselves.”
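The left-eye/right-eye process Bourbonnais describes can be sketched in a few lines. This is a minimal illustration, not Hybride’s pipeline: it assumes two parallel cameras offset along the camera’s right vector by half of a hypothetical 65 mm interocular distance, and it combines the two renders by row interleaving, which is only one possible scheme (the article does not say exactly how the two images were merged). The helpers `eye_positions` and `interleave_rows` are hypothetical names.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Image = List[List[int]]  # rows of pixel values


def eye_positions(center: Vec3, right: Vec3, interocular: float) -> Tuple[Vec3, Vec3]:
    """Offset a camera by half the interocular distance to each side along its unit right vector."""
    half = interocular / 2.0
    left_eye = tuple(c - half * r for c, r in zip(center, right))
    right_eye = tuple(c + half * r for c, r in zip(center, right))
    return left_eye, right_eye


def interleave_rows(left: Image, right: Image) -> Image:
    """Combine two equally sized renders: even rows from the left eye, odd rows from the right."""
    if len(left) != len(right):
        raise ValueError("left and right renders must have the same height")
    return [list(l_row) if y % 2 == 0 else list(r_row)
            for y, (l_row, r_row) in enumerate(zip(left, right))]


# Hypothetical 65 mm interocular distance, scene units in metres.
left_cam, right_cam = eye_positions((0.0, 1.6, 5.0), (1.0, 0.0, 0.0), 0.065)
frame = interleave_rows([[1, 1], [1, 1], [1, 1]], [[2, 2], [2, 2], [2, 2]])
print(left_cam, right_cam, frame)
```

In a real shot each eye’s image is a full render from its own camera; the tiny images here just make the interleaving pattern visible.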

Softimage Co., a subsidiary of Avid Technology, Inc., is an industry leader in 3D animation, 2D cel animation, compositing and special effects software. Softimage customers include R!OT, Valve, Capcom, Pandemic Studios, The Mill, Pixel Liberation Front, Blue Sky Studios, Hybride, Janimation, Quiet Man, PSYOP, Cinepix and Framestore CFC. For more information about Avid visit www.avid.com.

Hybride is a 10-year-old visual effects company located in Piedmont, Quebec, Canada. Its credits include SPY KIDS 2, ONCE UPON A TIME IN MEXICO, BATTLEFIELD EARTH, THE FACULTY and MIMIC. For more information visit www.hybride.com.

Koei Co., Ltd.

“Dynasty Warriors Online”

By Takashi Umezawa

Koei has been developing historical strategy simulation games such as “Romance of the Three Kingdoms” and “Nobunaga’s Ambition” since the heyday of the PC-98. Although console games are still the mainstream in Japan, the company released the PC network game “Nobunaga’s Ambition Internet” in 1998. Dynasty Warriors Online (hereafter Musou BB), which began service on November 1, 2006, is Koei’s latest network game. When I heard that XSI’s custom display host (hereafter CDH) had been used in its development, I immediately went to interview the people involved.

How is it different from the past Dynasty Warriors series?

“In a nutshell, this game is a multiplayer online action game. There is a town and a battlefield. In the town, you meet various people, form a party with your friends, and head to the action part, the battlefield. The goal is to fight repeatedly against players of other powers and ultimately lead your own army to unify the chaotic world.”

When we visited on October 26, the game was open to users from 5:00 pm to 3:00 am as a pre-opening ahead of the official service, but since the interview was in the morning, Musou BB was demonstrated on Koei’s internal server. Players first set up the character they will use, and there are quite a lot of choices, including gender, physique, facial features, hair, skin color, eye color, and even voice. I was surprised by the sheer number of voice choices, which let you personalize the characters you use in town and in combat and make them easier to tell apart. The main characters in this title are apparently built from 3,000 to 3,500 triangles. First, I asked about skin and eye color.

“The character model in the game lets the user set the color of the face, hair, and eyes freely. This uses the material’s diffuse value, but on its own that tends to make the surface look flat, so the brightness of the specular is adjusted automatically in conjunction with the diffuse value. For example, if the skin is dark and the specular value is the same as for fair skin, the skin loses its luster. So we investigated in XSI’s CDH how bright the specular should be, when the diffuse is dark, to look like human skin. We plotted the results, turned them into a function, and built it into the actual machine so that the skin wouldn’t look unnatural. I used to add highlights to the eyes by hand, but this time I used specular to make them look more lively.”
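The “plot the results and turn them into a function” step can be illustrated with a small lookup curve. The sketch below assumes that the function maps diffuse luminance to specular intensity by piecewise-linear interpolation between tuned sample points. The sample values are placeholders invented for illustration, not Koei’s data, and the Rec. 709 luminance weights are a common choice rather than anything the interview specifies.

```python
from bisect import bisect_right
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]

# Placeholder (diffuse luminance, specular intensity) samples, invented for
# illustration; in practice they would be tuned by eye in a real-time viewer.
SAMPLES: Sequence[Tuple[float, float]] = (
    (0.0, 0.60),
    (0.25, 0.45),
    (0.5, 0.35),
    (1.0, 0.25),
)


def luminance(rgb: RGB) -> float:
    """Relative luminance of a linear RGB color using Rec. 709 weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def specular_for_diffuse(rgb: RGB, samples: Sequence[Tuple[float, float]] = SAMPLES) -> float:
    """Interpolate specular intensity from diffuse luminance, clamped to the sampled range."""
    xs = [x for x, _ in samples]
    x = min(max(luminance(rgb), xs[0]), xs[-1])
    i = bisect_right(xs, x)
    if i >= len(samples):  # at or beyond the last sample
        return samples[-1][1]
    (x0, y0), (x1, y1) = samples[i - 1], samples[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


dark_skin = specular_for_diffuse((0.25, 0.16, 0.12))
fair_skin = specular_for_diffuse((0.95, 0.80, 0.70))
print(round(dark_skin, 3), round(fair_skin, 3))
```

A lookup curve like this keeps the tuning data editable by artists, whereas a fitted closed-form function would be cheaper to evaluate on the target hardware.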

There are also various weapons to choose from. The default equipment is plain, but as the game progresses you can obtain all kinds of equipment, and it is actually reflected on the character on screen.

At the beginning of the game, you start from your own house, but in this house there are an armory, a clothing box, a writing desk for exchanging e-mails, and a medal box for viewing medals and play history. This house is also small at first, but it gets bigger and bigger as the game progresses. As soon as I stepped out of my house, I was amazed by the beauty of the town, with its meticulous attention to detail. Even the way the surface of the pond sways and reflects is realistically expressed.

This is Koei’s first title to use real-time shaders aimed at the next generation of hardware. When asked about performance, he said, “There are no battles in town, so when many players gather and things slow down a little, frame drops don’t really get in the way of play. On the battlefield, however, they can decide who wins or loses. To avoid that, we tried various ideas, such as reducing the weight of the data. We also set ourselves the challenge of trying new kinds of expression, so we used a specular map and a bloom effect to render the brilliance of light effectively. The characters’ shoulder armor is gold, and you can clearly see the light glint off it in the sun.” He continued: “For light sources, I mainly use two parallel lights, plus a hemispherical light. I can’t go as far as image-based lighting, but I aimed for something close to it. First, the lighting changes over time. The weather changes too, with snow, clouds, fog and so on, and the impression of winter and summer changes greatly depending on the path of the sun and the amount of light. At the object level, too, trees bloom and wither. The lighting is particularly elaborate, and we put a lot of effort into shading and environmental effects. We also simulated the scattering of sunlight, called light scattering, to create a haze-like effect that conveys realistic atmospheric conditions.”
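A hemispherical light of the kind mentioned above is usually implemented by blending a sky color and a ground color according to how much the surface normal faces up, then adding the contribution of the directional (parallel) lights. The following is a minimal sketch of that common formulation with simple Lambert diffuse. It is not Koei’s shader, and the function names and color values are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def hemisphere_ambient(n: Vec3, sky: Vec3, ground: Vec3, up: Vec3 = (0.0, 1.0, 0.0)) -> Vec3:
    """Blend from the ground color (unit normal facing down) to the sky color (facing up)."""
    t = 0.5 * (1.0 + dot(n, up))
    return tuple(g + t * (s - g) for s, g in zip(sky, ground))


def shade(normal: Vec3, albedo: Vec3, sky: Vec3, ground: Vec3,
          lights: Sequence[Tuple[Vec3, Vec3]]) -> Vec3:
    """Hemisphere ambient plus Lambert diffuse from directional (parallel) lights.

    Each light is (direction pointing towards the light, color).
    """
    n = normalize(normal)
    total: List[float] = list(hemisphere_ambient(n, sky, ground))
    for direction, color in lights:
        n_dot_l = max(0.0, dot(n, normalize(direction)))
        for i in range(3):
            total[i] += color[i] * n_dot_l
    return tuple(a * c for a, c in zip(albedo, total))


# Two parallel lights (a warm key and a dim cool fill) plus a hemisphere light.
sun = ((0.3, 1.0, 0.2), (1.0, 0.9, 0.8))
fill = ((-0.5, 0.4, -0.3), (0.2, 0.25, 0.3))
print(shade((0.0, 1.0, 0.0), (0.8, 0.7, 0.6), (0.3, 0.4, 0.6), (0.15, 0.12, 0.1), [sun, fill]))
```

Time-of-day and weather changes like those described would then amount to animating the light directions and colors and the sky and ground colors.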

I asked whether the colors of the townscape backgrounds came from textures or were baked into vertex colors.

“Basically, color comes from textures. In addition, we use real-time shadows, called shadow maps, which can show a shadow falling on the ground or on a character. The backgrounds also use vertex colors. Even with real-time shadows, there are still many places where subtle shadows are painted by hand. However, where a texture tiles, we can’t paint unique shadows into it, so we use vertex colors in those areas; we’ll cover that later in the modeling section.”
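The shadow-map test and the vertex-color approach described above can be combined in a short sketch. It assumes a depth map rendered from the light, light-space UV coordinates in [0, 1], and a small depth bias to avoid self-shadowing. The names, bias and shadow strength are illustrative assumptions, and the final color simply multiplies the tiled texture by the baked vertex shade and the real-time shadow term.

```python
from typing import List, Tuple

DepthMap = List[List[float]]  # closest depth seen from the light, per texel
RGB = Tuple[float, float, float]


def in_shadow(depth_map: DepthMap, u: float, v: float,
              depth_from_light: float, bias: float = 0.005) -> bool:
    """Shadow-map test: shadowed if something nearer the light was recorded at this texel."""
    height, width = len(depth_map), len(depth_map[0])
    x = min(width - 1, max(0, int(u * width)))    # light-space UV in [0, 1] -> texel
    y = min(height - 1, max(0, int(v * height)))
    return depth_from_light - bias > depth_map[y][x]


def shade_texel(texel: RGB, vertex_shade: float, shadowed: bool,
                shadow_strength: float = 0.5) -> RGB:
    """Tiled texture color x baked vertex-color shade x real-time shadow attenuation."""
    factor = vertex_shade * ((1.0 - shadow_strength) if shadowed else 1.0)
    return tuple(c * factor for c in texel)


depth_map = [[0.5, 1.0],
             [1.0, 1.0]]   # an occluder at depth 0.5 covers the top-left texel
shadowed = in_shadow(depth_map, 0.1, 0.1, depth_from_light=0.8)
print(shadowed, shade_texel((1.0, 0.5, 0.2), vertex_shade=0.8, shadowed=shadowed))
```

Because the vertex shade is stored per vertex rather than in the texture, the same tiling texture can carry different baked shadowing wherever it is repeated.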

When I asked whether high-spec hardware would be needed to show all of these graphics, he opened the settings screen and explained.

“We want as many users as possible to enjoy the game, so players can set the graphics options for towns and battlefields separately to suit their play environment. Various settings are available, including screen resolution, texture resolution, distant-view display, model display, lighting, light diffusion (bloom effect), water quality and real-time shadows. We initially planned to use reflections, normal maps and so on for the characters, but because an online game may have to display many players at once, that wasn’t possible. The backgrounds, however, use relatively rich polygon counts. The water surface uses a real-time normal-map shader with many parameters, and I adjusted and checked its color, transparency, reflectance and so on in CDH.”

After this, we moved to a battle scene and I was able to play for about 10 minutes. “The good thing about Musou is that you can enjoy it even with simple controls,” he said. This game is recommended for anyone who wants an exhilarating experience.

Check the same result as on the actual machine, right in XSI!

Next, I asked how they use CDH, and as I listened I couldn’t help muttering, “That’s wonderful,” again and again. It really is an ideal way to use CDH.

“I think the XSI function we use most is CDH. We use it mainly for modeling: when you want to check something quickly while modeling, you can check all sorts of things in real time in XSI without exporting to the actual machine. For Musou BB the actual machine is the PC, but for a PLAYSTATION 3 (hereafter PS3) title you would see it just as on a real PS3. At Koei, we are building a graphics library for games that renders the same way on PC, Xbox 360 (hereafter 360) and PS3. By loading the Windows build of the engine into XSI’s CDH, we can preview in XSI, and that preview matches the 360 and PS3. In other words, it uses the same engine as the actual machine, so you can check the appearance on the spot.” It’s a simple explanation, but from the designer’s point of view it is a truly wonderful environment.

“This is the material’s render tree, and it contains the information needed on the actual machine. We convert the scene into actual-machine data with our own converter and load it into the game on the actual machine. That conversion processing is also done within CDH, so it looks the same.”

“First of all, the shader we use as standard in Musou BB is what we call the standard shader.” He opened the shader settings screen from CDH. “If you change the color of the ambient parameter, or change the diffuse color or adjust it with the slider, the result matches the actual machine.” You can clearly see the shading in CDH change in real time. “This lighting setup itself is the same as on the actual machine. By changing this material’s attributes to increase the specular intensity, or by changing the specular color, you can reproduce in XSI exactly what the actual machine shows, and view it in CDH. In addition, edits made in CDH are reflected in the XSI Render Tree. Because you output the edited material after confirming it, the data stays properly in XSI and there is no need to rework anything. That is the basic concept of CDH and how we use it.” What a wonderful, truly ideal way to use CDH.

“We created shaders in-house to support a variety of looks, along with tools for assigning a shader to each material. For example, if you want per-pixel lighting, you apply that shader and reload the model into CDH; the specular is then computed per pixel instead of per vertex, giving smoother results. Which shader is used is determined by the material name: STD is the standard shader, PSTD is the per-pixel version, and so on. If you want reflections, you can select a reflection map and check reflectance settings that vary by model. Each shader embeds information about the parameters it requires, and the necessary parameters are created automatically in the CDH UI from that information.

“The metadata attached to the variables used in a shader is called annotations; it holds the parameter name, description, required values and UI type. For a reflection shader, CDH reads this annotation information and lets you edit and check its parameters. Of course, the data remains in XSI.

“User-defined data picked up from the annotations is stored in the Render Tree nodes where you normally write real-time shader code, such as DxProgram. Therefore, shader-specific parameters can also be checked and set in CDH and saved in XSI. The XSI material settings don’t accept values above 1, but this mechanism lets us save such values as character strings.”
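Reading parameter descriptions out of shader annotations can be sketched as follows. The example assumes the DirectX effect-file annotation syntax (`<string UIName = "..."; float UIMax = ...;>`) and handles only a simplified subset of it (no semantics, no strings containing `>`). The interview does not describe how CDH actually parses annotations, so `parse_parameters` is a hypothetical helper that only shows how names, UI types and value ranges could become UI entries.

```python
import re
from typing import Dict, List

# type name < annotations > = default ;   (simplified: no semantics, no '>' inside strings)
PARAM_RE = re.compile(r"(\w+)\s+(\w+)\s*<(.*?)>\s*=\s*([^;]+);", re.S)
# annotation: type name = value ;
ANNOTATION_RE = re.compile(r'(\w+)\s+(\w+)\s*=\s*("[^"]*"|[^;]+);')


def parse_parameters(source: str) -> List[Dict[str, object]]:
    """Turn annotated shader parameters into UI descriptions."""
    params: List[Dict[str, object]] = []
    for type_name, name, body, default in PARAM_RE.findall(source):
        ui: Dict[str, object] = {"name": name, "type": type_name, "default": default.strip()}
        for ann_type, ann_name, value in ANNOTATION_RE.findall(body):
            value = value.strip()
            if ann_type == "string":
                ui[ann_name] = value.strip('"')
            elif ann_type in ("float", "int"):
                ui[ann_name] = float(value)
            else:
                ui[ann_name] = value
        params.append(ui)
    return params


SHADER = '''
float Reflectance <
    string UIName = "Reflectance";
    string UIWidget = "Slider";
    float UIMin = 0.0;
    float UIMax = 2.0;
> = 0.5;
float4x4 World;
'''

for p in parse_parameters(SHADER):
    print(p)
```

Parameters without annotations, like `World` here, are skipped, so only values an artist is meant to tweak would appear in the generated UI.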

Storing data that XSI cannot handle natively as character strings keeps the information in the material tree. It may seem obvious, but it is a very effective method.
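The string-storage trick can be shown with a round trip. The sketch assumes a material field clamped to [0, 1], modeled by a hypothetical `clamp_ui`, and a hypothetical `key=value;...` text format for the string attribute. Neither is XSI’s or Koei’s actual format.

```python
from typing import Dict


def clamp_ui(value: float) -> float:
    """Stand-in for a material field that only accepts values in [0, 1]."""
    return min(1.0, max(0.0, value))


def encode_params(params: Dict[str, float]) -> str:
    """Serialize parameters into one string attribute, e.g. 'gloss=2.5;tint=0.3'."""
    return ";".join(f"{name}={value!r}" for name, value in sorted(params.items()))


def decode_params(text: str) -> Dict[str, float]:
    """Parse the string attribute back into floats, with no clamping."""
    result: Dict[str, float] = {}
    for item in filter(None, text.split(";")):
        name, _, value = item.partition("=")
        result[name] = float(value)
    return result


params = {"gloss": 2.5, "tint": 0.3}
stored = encode_params(params)
print(clamp_ui(params["gloss"]), stored, decode_params(stored))
```

The clamped field would lose the 2.5, while the string survives unchanged and decodes back to the original values.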

“The next one uses a slightly more complex lighting model, defined per material. For example, if you apply a material called copper, you can check copper’s reflection model inside CDH.” When I asked whether this was based on the idea of a BRDF, he replied, “Yes, that’s right. Of course, the results of editing here are embedded in the XSI node. This copper uses a normal map, so the specular appearance changes according to the normal map, and that result is also reflected in the XSI tree. You can check it like this. Recently, the actual machine also has shadow maps and post effects, and it is better to check those in CDH as well, since the final look may only emerge once they are included. To stay as close to the actual machine as possible, we are developing support for shadows and post effects here, and we can now check things like blur and depth-of-field effects within CDH.”
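As a rough stand-in for a per-material BRDF like the copper example, the sketch below combines a Blinn-Phong specular lobe with Schlick’s Fresnel approximation. The copper reflectance values are commonly quoted approximate linear-RGB figures, the shininess exponent is arbitrary, and this is not the lighting model Koei used. With a normal map, `n` would be the per-pixel normal read from the map.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Commonly quoted approximate linear-RGB reflectance of copper at normal incidence.
COPPER_F0: Vec3 = (0.955, 0.638, 0.538)


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return v if length == 0.0 else tuple(c / length for c in v)


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def schlick_fresnel(f0: Vec3, cos_theta: float) -> Vec3:
    """Schlick's approximation: F = F0 + (1 - F0) * (1 - cos(theta))^5."""
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


def metal_specular(n: Vec3, to_light: Vec3, to_viewer: Vec3,
                   f0: Vec3 = COPPER_F0, shininess: float = 64.0) -> Vec3:
    """Fresnel-tinted Blinn-Phong specular, scaled by N.L."""
    n, to_light, to_viewer = normalize(n), normalize(to_light), normalize(to_viewer)
    h = normalize(tuple(a + b for a, b in zip(to_light, to_viewer)))  # half vector
    n_dot_l = max(0.0, dot(n, to_light))
    lobe = max(0.0, dot(n, h)) ** shininess
    fresnel = schlick_fresnel(f0, max(0.0, dot(h, to_viewer)))
    return tuple(f * lobe * n_dot_l for f in fresnel)


print(metal_specular((0.0, 0.0, 1.0), (0.3, 0.0, 1.0), (-0.3, 0.0, 1.0)))
```

Because the Fresnel term tints the highlight with the metal’s own reflectance, copper’s reddish highlight comes out of the material data rather than a separately chosen specular color.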

“Lighting can also be saved as parameters. At this stage it is still a test feature, but lighting and camera information can be saved as text: which light types are used (parallel or point light sources), their colors, the camera position and so on can be written out, and the settings can be read back in. For example, if the environment set up on the actual machine is written out in a specified format, CDH can read that settings file, which describes how a character will look under the lighting of a particular background, making it possible to reproduce the same environment as the actual machine.”
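A text-based lighting and camera description of the kind described above can be sketched as a small reader and writer. The line format (`light type=... color=... dir=...`, `camera pos=... target=...`) is entirely hypothetical; the interview says only that light types, colors and camera position are written out in a specified format and read back.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Light:
    kind: str      # "directional" (parallel) or "point"
    color: Vec3
    vector: Vec3   # direction for directional lights, position for point lights


@dataclass
class Environment:
    lights: List[Light] = field(default_factory=list)
    camera: Dict[str, Vec3] = field(default_factory=dict)


def _vec(text: str) -> Vec3:
    x, y, z = (float(part) for part in text.split(","))
    return (x, y, z)


def _fmt(v: Vec3) -> str:
    return ",".join(repr(c) for c in v)


def parse_environment(text: str) -> Environment:
    env = Environment()
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # allow comments and blank lines
        if not line:
            continue
        head, *fields = line.split()
        values = dict(f.split("=", 1) for f in fields)
        if head == "light":
            kind = values["type"]
            key = "dir" if kind == "directional" else "pos"
            env.lights.append(Light(kind, _vec(values["color"]), _vec(values[key])))
        elif head == "camera":
            env.camera = {k: _vec(v) for k, v in values.items()}
        else:
            raise ValueError(f"unknown entry: {head!r}")
    return env


def format_environment(env: Environment) -> str:
    lines = []
    for light in env.lights:
        key = "dir" if light.kind == "directional" else "pos"
        lines.append(f"light type={light.kind} color={_fmt(light.color)} {key}={_fmt(light.vector)}")
    if env.camera:
        lines.append("camera " + " ".join(f"{k}={_fmt(v)}" for k, v in env.camera.items()))
    return "\n".join(lines)


SAMPLE = """
# written out from the game side (hypothetical format)
light type=directional color=1,0.95,0.9 dir=0.3,-1,0.2
light type=point color=1,0.5,0.2 pos=0,3,0
camera pos=0,1.6,5 target=0,1,0
"""

env = parse_environment(SAMPLE)
print(format_environment(env))
```

Keeping the format plain text means both the game and the preview tool can write and read it without sharing any binary data structures.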

All I can say is that it’s truly great. Designers are lucky to be able to work in such an environment; few companies can afford to develop this kind of workflow in-house. It can only be the result of the developers’ efforts. However, the person who built it said modestly, “No, it’s not that I did anything special. I just collected the source code from the people who actually made the engine and the converter and put it into CDH.” A designer who used CDH said:

“Musou BB was the first in-house title to use the newly developed shaders, so at first I was feeling my way, trying to find out what parameters to give the shader to create an effective, convincing look. It was painstaking work, and CDH was very useful there as well. We designers moved the sliders in CDH’s real-time preview to decide how much of each value to give to make things look right. It contributes greatly to improving the quality of the models.”

Character modeling

Next, I asked the person in charge of character modeling how XSI was used in this project.

“Here is the custom script toolbar palette that the character team uses. Toolbars like this can be set up almost automatically by drag and drop. Designers often create simple script-level tools themselves,” the person in charge of characters continued. “It’s very useful for creating character models. What’s inside is simply a scripted command history of frequently used functions, and thanks to this you can work very efficiently. The function that selects an entire edge loop at once is also very useful for editing hair and the like, and I often use Deform’s Smooth function when I want clean specular highlights on skin. Even with just the default functions, XSI is very useful. After that, there’s the Synoptic View. I use it when creating character poses for publicity images and when setting up facial animation. With it, not only animators but other staff too can easily create poses, which is very convenient.”

Backgrounds: the User Normal Editing tool and Ambient Occlusion (ABO)

“Like the character team, we found the enhanced edge loop selection and modeling functions very helpful,” the person in charge of backgrounds began. “This game displays a lot of characters, so I thought about what I could do to make the backgrounds look richer than their polygon budget would otherwise allow. In particular, I used the User Normal Editing tool included in XSI. Here is an easy-to-understand example, a tree model. On the left is the default model: it has few polygons, and its shading is not attractive. Until now, a common technique was to forcibly turn the normals upward, as in the middle model, to make it brighter. But look at the model on the right: thanks to User Normal Editing, I was able to edit the normals exactly as I wanted. I then loaded the adjustments into CDH and checked them as I worked.”
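The difference between forcing every normal straight up and editing normals selectively can be expressed as a blend toward a target direction. This is a minimal sketch of that idea, assuming unit-length input normals and +Y as the up axis. It is not how XSI’s User Normal Editing tool is implemented; that tool is interactive, and `bend_normal` is a hypothetical helper.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def bend_normal(normal: Vec3, amount: float, target: Vec3 = (0.0, 1.0, 0.0)) -> Vec3:
    """Blend a unit vertex normal toward a target direction and renormalize.

    amount = 0 keeps the modeled normal; amount = 1 replaces it with the target,
    which is the old 'point every normal up' trick.
    """
    blended = tuple(n + amount * (t - n) for n, t in zip(normal, target))
    length = math.sqrt(sum(c * c for c in blended))
    if length == 0.0:  # normal exactly opposite the target
        return target
    return tuple(c / length for c in blended)


# A sideways-facing leaf-card normal, nudged partway toward the sky.
print(bend_normal((1.0, 0.0, 0.0), 0.3))
```

Choosing a different `amount` per vertex is what separates selective editing from the all-or-nothing approach described for the middle model.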

“One more thing: in the background team, polygon counts are limited, so we baked shadows into vertex colors. We also used self-shadowing, but where that didn’t work, we used vertex colors. Until now I did that work almost entirely by hand, but this time I used XSI’s render vertex function, so I could bake ABO easily. With backgrounds made of many objects, I used to worry about which side to place the lights on, but ABO bakes shadows that hold up from any direction. In other words, a single setting button gives you vertex-color shadows that look acceptable from any side. This model simply has ABO baked with the default settings, yet even so it is quite ready for use in an actual project. We make some adjustments afterward, but some models don’t need any, so work has sped up and it’s really convenient.”
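Baking ambient occlusion into vertex colors can be sketched as casting rays over the hemisphere above each vertex and storing the cosine-weighted fraction that escapes. The sketch assumes unit normals, uses spheres as stand-in occluders instead of real scene geometry, and uses deterministic Fibonacci-spiral sampling. XSI’s render vertex feature is not described in the interview and may work differently; all names here are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Sphere = Tuple[Vec3, float]  # (center, radius): stand-in occluders for scene geometry

GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def hemisphere_directions(normal: Vec3, count: int) -> List[Vec3]:
    """Fibonacci-spiral unit directions over the sphere, flipped into the normal's hemisphere."""
    dirs = []
    for i in range(count):
        y = 1.0 - 2.0 * (i + 0.5) / count
        r = math.sqrt(1.0 - y * y)
        phi = i * GOLDEN_ANGLE
        d = (math.cos(phi) * r, y, math.sin(phi) * r)
        if dot(d, normal) < 0.0:
            d = (-d[0], -d[1], -d[2])
        dirs.append(d)
    return dirs


def ray_hits_sphere(origin: Vec3, direction: Vec3, sphere: Sphere, max_distance: float) -> bool:
    """Unit-direction ray vs. sphere, counting only hits in front of the origin and within range."""
    center, radius = sphere
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return False
    root = math.sqrt(disc)
    t_near, t_far = -b - root, -b + root
    return t_far > 1e-6 and t_near < max_distance


def bake_vertex_ao(vertices: Sequence[Vec3], normals: Sequence[Vec3], occluders: Sequence[Sphere],
                   samples: int = 64, max_distance: float = 10.0) -> List[float]:
    """Return one value per vertex: 1.0 = fully open, 0.0 = fully occluded."""
    values = []
    for p, n in zip(vertices, normals):
        origin = tuple(pc + 1e-4 * nc for pc, nc in zip(p, n))  # offset to avoid self-hits
        visible = total = 0.0
        for d in hemisphere_directions(n, samples):
            weight = dot(d, n)  # cosine weighting
            total += weight
            if not any(ray_hits_sphere(origin, d, s, max_distance) for s in occluders):
                visible += weight
        values.append(visible / total if total > 0.0 else 1.0)
    return values


# A ground vertex with a sphere hovering above it, and one out in the open.
ao = bake_vertex_ao([(0.0, 0.0, 0.0), (20.0, 0.0, 0.0)],
                    [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
                    [((0.0, 2.0, 0.0), 1.0)])
print([round(a, 3) for a in ao])
```

Since no light direction is involved, the baked value reads plausibly from any side, which matches the “acceptable from any side” property described above.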

Indeed, if you look at the model actually used in Musou BB, you can see that the shadows are well cast.

Scripting

Finally, he talked about the usefulness of scripting in XSI.

“Scripting is relatively easy to understand, even for us designers. You can use it like an action (macro) by simply listing commands, or for batch processing such as converting multiple databases at once. I also often use simple VBS scripts to repeat the same process on multiple Musou BB models. One example in Musou BB is the camera fly-through animation before a battle stage. Even a simple ease-out move from A to B takes time and effort to do programmatically, but this time it was very easy because we could use the motion data output from XSI as-is. The background coordinate data picked up by the designer was converted into a CSV file, read into XSI with a script, turned into an animation with adjusted function curves at the press of a single button, and then converted automatically. Beyond this, scripting can be very convenient depending on your ingenuity, so I always use it.”
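The CSV-to-camera-animation step can be sketched outside XSI. The interview mentions VBScript inside XSI; this Python sketch instead assumes a hypothetical CSV with `frame,x,y,z` columns and evaluates the path with a quadratic ease-out between keys, which is one reading of a simple slowdown from A to B. In XSI itself the keys would be set on the camera and shaped with function curves.

```python
import csv
import io
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Key = Tuple[float, Vec3]  # (frame, position)


def read_keys(csv_text: str) -> List[Key]:
    """Read rows with hypothetical 'frame,x,y,z' columns into keys sorted by frame."""
    rows = csv.DictReader(io.StringIO(csv_text))
    keys = [(float(r["frame"]), (float(r["x"]), float(r["y"]), float(r["z"]))) for r in rows]
    return sorted(keys, key=lambda k: k[0])


def ease_out(t: float) -> float:
    """Quadratic ease-out: fast at the start, slowing down into the next key."""
    return 1.0 - (1.0 - t) ** 2


def position_at(keys: List[Key], frame: float) -> Vec3:
    """Evaluate the camera path, easing out between consecutive keys."""
    if frame <= keys[0][0]:
        return keys[0][1]
    for (f0, p0), (f1, p1) in zip(keys, keys[1:]):
        if frame <= f1:
            t = ease_out((frame - f0) / (f1 - f0))
            return tuple(a + t * (b - a) for a, b in zip(p0, p1))
    return keys[-1][1]


CSV_TEXT = "frame,x,y,z\n0,0,5,20\n48,10,3,4\n"
keys = read_keys(CSV_TEXT)
for f in (0, 12, 24, 48):
    print(f, position_at(keys, f))
```

Driving the motion from exported data rather than hand-coded curves is the point made above: designers adjust the path, and the runtime only samples it.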

One of the challenges for programmers is how to provide an efficient environment in which designers can work easily. CDH gives access to custom tools directly from within XSI rather than through separately launched external tools, and integrates their information seamlessly with XSI. In other words, designers can use the original tools created by programmers as if they were part of XSI itself, without any sense of incongruity. Designers can also handle any task by combining XSI’s excellent modeling and scripting functions, greatly improving work efficiency.

SOFTIMAGE|XSI is powerful software that brings great benefits to both programmers and designers, creating the best environment for next-generation game development.

March 1997

Sumatra is more than an evolutionary upgrade. It is a bold new initiative that aims for nothing short of a revolution.

To Softimage Inc., computer animation is about much more than the ability to describe intricate shapes, simulate physical events, or create walking characters automatically. It’s about the artist and about how the artist creates. Softimage® 3D has been and always will be an artist’s tool – conceived by artists, designed for artists, and evolved under the direction of artists.

Over the past 10 years, Softimage 3D has revolutionized the 3-D animation industry, with the first artist-centric user interface, an advanced tool set designed for the way artists work, and a production-centric focus that seamlessly fits into real-world production environments. Today, Softimage 3D is an essential tool for creation and production of serious works of animation.

This paper provides perspective on the past decade in computer animation, discusses some of the challenges facing the industry today, and outlines a road map for how Softimage will drive innovation for the next 10 years.

The ability to use computers to create stunning visual effects and, perhaps more importantly, believable characters, quickly caught the imagination of animation artists 10 years ago. Computer animation now has completely captured the hearts and minds of audiences around the world. Softimage is proud of its role in the computer animation industry, particularly the accomplishments below.

The 3-D computer animation industry has reached one of the most pivotal times in its short history. Whereas productions were once typically created in “garage shops,” larger workgroups are becoming the more common entity. These workgroups include many types of creators – directors, producers, musicians, audio engineers, editors, marketers and, of course, animators – all working collaboratively on productions. With this shift in the typical production model, the challenges of 3-D computer animation have changed greatly. These new challenges cannot be addressed simply by adding more hardware and features to animation systems: it is time for a fundamental paradigm shift in the way we approach the creative process.

For example, today’s productions require extremely rapid development. However, the same methodology introduced in the first 3-D computer animation products is still being used today. Modeling, animation and rendering features and functions have advanced tremendously, but they are still very linear processes. Aside from the creative restraints this imposes, it also requires animators to spend a tremendous amount of time moving between such tasks as modeling an object, adding materials and textures, preview rendering, final rendering, and adjusting lights, often only to repeat the process because some element was missed. This can easily happen thousands of times in a single production; reducing that number is imperative to help animators meet today’s production schedules.

Animation is not well-integrated into the production process. Once an animation is complete, it’s extremely difficult and costly to make revisions based on other elements in a production. For example, adding one second to the beginning and end of an animation to match a live-actor scene is extremely difficult. Synching sound and animation is challenging because no system available today provides a truly integrated time line for different media. And within the editing environment, where the make-or-break finishing touches are added, almost no controls are available over aspects such as lighting and materials for animation. Integrating animation into the overall production process is crucial to making it a more easily introduced element and enabling the next creative leap in computer animation.

The current vision at Softimage is a direct extension of what it has been pursuing over the last 10 years, but it is bolder and more encompassing, extending to the entire production process. The goals are to provide the ultimate creative, collaborative environment for 3-D animators and to extend well beyond that into the entire digital-content creation process. For Softimage, all digital production is inextricably linked, and only through the integration of all elements, including powerful 3-D animation capabilities, can production reach its full creative potential and efficiency. Over the next decade, Softimage will continue its legacy of artistic innovation and will begin a new one: spearheading groundbreaking new ways to create digital content.

The tool Softimage is building to realize this vision, code-named Digital Studio, is a comprehensive nonlinear editing environment that encompasses all aspects of digital media production, including nonlinear editing (NLE), audio, compositing, titling, paint and, of course, 3-D animation. Because all media in this environment are linked by a single time line, each element can be created, edited, re-edited and synchronized in real time.

To fulfill the vision, Softimage is creating a next-generation animation system to be both integrated into Digital Studio and fully functional as a standalone system.

Sumatra, the code name for Softimage’s new 3-D animation product, will be the world’s first collaborative nonlinear animation system. More than a simple upgrade, Sumatra encompasses the rich features of Softimage 3D and builds on a legacy of speed, integration and productivity earned from a decade of experience in the most demanding production environments. With Sumatra, 3-D animation is fully integrated for the first time into NLE, compositing, audio and other production elements.

Sharing the same architecture as Digital Studio, Sumatra is a fully multithreaded, cross-platform application capable of exploiting the full power of Windows NT and Silicon Graphics workstations, as well as multiprocessing servers. A highly optimized operator structure combined with a powerful execution engine ensures that all functions work together seamlessly and can be combined in a wide variety of configurations. The real-time heritage of this architecture guarantees the fastest refresh for viewing and rendering. Artists have full control over how elements update, including level of detail and refresh order.

Sumatra will provide the most open platform in the history of the computer animation industry. To create features for Sumatra, Softimage developers are using the same environment that will be available to customers and software partners. Third-party plug-ins will have the same power as the native tool set, with complete control over data and user-interface parameters.

Softimage is the industry-acknowledged leader in interface design for artists, in large part because of its fundamental philosophy that tools and features are only as powerful as the creative workflow allows them to be. Sumatra’s transparent interface provides two-click access to all major tools, a work-centric design, and direct, modeless object manipulation. Combining these principles with contextual, customizable menus and an innovative design, Sumatra enables individual artists to adapt the interface to their needs and work style.

Sumatra provides intuitive new ways to work graphically with scene data, simplifying the management of multiple scenes and mass character animation. One, two or all characters can be changed with just a few mouse clicks. The overall direction of a crowd can easily be shifted. Artists can go beyond granular animation to high-level character control – from animating one athlete to orchestrating the complex actions of an entire team. It is also possible to set up several tools on the same screen – for example, interactive rendering in one window and modeling in another.

The future of 3-D content creation will be built around multiuser, multilayered animation, for which a common vision, easy access to production data, and project tracking are essential elements for success.

Designed as a true multiuser, multilayered animation system, Sumatra will shift animation production from an individual task to a workgroup-centric process. Sumatra will be the first animation product to allow teams to work efficiently across hardware and data boundaries, enabling the whole to exceed the sum of its parts.

In practice, this collaborative integration enables one artist to model a character while another designs the set lighting and a third works on facial animation. Advanced project tracking, multiscene and multiobject viewing, and advanced database management will also be central elements in Sumatra, offering significant new support for team-based animation production.

Small incremental steps that make existing processes better won’t change the animation industry, but new ideas that challenge existing norms will. Nonlinear animation is an industry first and a ground-shaking new idea. In nonlinear animation, animation data can be thought of as an image layer rather than a linear process involving a single stream of keyframes. This frees the artist to mix, move, reorganize and change different streams of animation as if they were layers of an image.

As an example, consider the facial animation of a character that requires hundreds of different expressions. In the past, different mouth orientations could be combined to produce the appearance of speech. Eyebrows and eyeballs could also be controlled independently. With Sumatra, the artist will be able to assign a dedicated animation to each facial muscle, assigning one set of muscles to the jaw, and another set to the tongue, nose and eyelids. When the artist is ready to animate the face, each animation stream can be mixed, stretched, reorganized and then “composited” to the global time line by hand or by procedural functions to create countless expressions.

Secondary animation, such as breathing, veins pulsing and random nervous twitching, could be layered on top of the original animation to give even more life to the character. To complete the picture, dynamics could be added to the face to create the appearance of aging.
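To make the layering idea concrete, here is a minimal sketch of how independent animation streams might be moved, stretched and mixed on a global time line, with secondary motion such as breathing added on top. It assumes linear interpolation between keys, a weighted average for base clips and simple addition for secondary layers; the `Clip` class and `evaluate` function are hypothetical illustrations, not Sumatra's API.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Clip:
    """Hypothetical clip: keyframes for one animation channel (e.g. jaw rotation)."""
    keys: list[tuple[float, float]]  # (local_time, value), strictly increasing times
    start: float = 0.0      # where the clip begins on the global time line
    scale: float = 1.0      # > 1 stretches the clip, < 1 compresses it
    weight: float = 1.0     # mixing weight
    additive: bool = False  # True for secondary layers such as breathing

    def sample(self, t: float) -> float | None:
        """Value at global time t, or None when the clip is inactive there."""
        local = (t - self.start) / self.scale
        if local < self.keys[0][0] or local > self.keys[-1][0]:
            return None
        times = [k for k, _ in self.keys]
        i = bisect_right(times, local)
        if i == len(self.keys):  # exactly on the last key
            return self.keys[-1][1]
        (t0, v0), (t1, v1) = self.keys[i - 1], self.keys[i]
        return v0 + (local - t0) / (t1 - t0) * (v1 - v0)  # linear interpolation


def evaluate(clips: list[Clip], t: float) -> float:
    """Weighted average of active base clips plus the sum of active additive layers."""
    total, weight_sum, offset = 0.0, 0.0, 0.0
    for clip in clips:
        value = clip.sample(t)
        if value is None:
            continue
        if clip.additive:
            offset += clip.weight * value
        else:
            total += clip.weight * value
            weight_sum += clip.weight
    base = total / weight_sum if weight_sum > 0 else 0.0
    return base + offset


# The same jaw motion used twice: once as authored, once moved to t=2 and stretched 2x.
jaw = Clip([(0.0, 0.0), (1.0, 10.0)])
jaw_slow = Clip([(0.0, 0.0), (1.0, 10.0)], start=2.0, scale=2.0)
breathing = Clip([(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)], weight=0.5, additive=True)

print(evaluate([jaw, breathing], 0.5))       # 5.5
print(evaluate([jaw_slow, breathing], 3.0))  # 5.0
```

Because each stream keeps its own keys and only its placement (`start`, `scale`, `weight`) changes, the same authored motion can be reused and re-timed without editing a single keyframe, which is the point the nonlinear approach makes.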

Because independent streams can be combined freely, a small set of sequences yields a combinatorially large number of results, so nonlinear animation enables the artist to breathe more life into a character with fewer animation sequences. It is a simpler, highly efficient way to animate characters and objects.
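The arithmetic behind this claim can be sketched briefly: if a face is split into independent streams and the artist authors a few clips per stream, the number of clips grows as a sum while the number of distinct expressions grows as a product. The facial library below is a made-up example, and the helper names are hypothetical.

```python
from itertools import product
from math import prod


def expression_counts(library: dict[str, list[str]]) -> tuple[int, int]:
    """Return (clips authored, distinct combinations) for independent streams."""
    authored = sum(len(clips) for clips in library.values())
    combinations = prod(len(clips) for clips in library.values())
    return authored, combinations


def expressions(library: dict[str, list[str]]):
    """Yield every combination as {stream: clip}, picking one clip per stream."""
    streams = list(library)
    for choice in product(*(library[s] for s in streams)):
        yield dict(zip(streams, choice))


# Hypothetical facial library: four streams, 15 hand-made clips in total.
face = {
    "jaw": ["closed", "open", "clench", "chew"],
    "tongue": ["rest", "up", "out"],
    "eyelids": ["open", "squint", "closed"],
    "brows": ["neutral", "raised", "furrowed", "sad", "skeptical"],
}
print(expression_counts(face))  # (15, 180)
```

Fifteen authored clips give 4 × 3 × 3 × 5 = 180 combinations before any stretching, re-timing or weighting is applied, each of which multiplies the variety further.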

As with preceding generations of Softimage animation products, Sumatra will deliver the industry’s most artist-oriented animation tools. Softimage will once again change the art of taming the science of motion.

Moving beyond today’s explicit definition of movement towards the creation of characters that can react to their environment, Sumatra characters will be able to take action seemingly of their own volition, responding to environments in ways defined by artists. Actions can be combined with customized transitions, and character behaviors can be triggered by events.
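One common way to model actions joined by customized transitions and triggered by events is a small state machine. The sketch below is an illustration under that assumption: the `Behavior` class, the event names and the blend times are all hypothetical, and a real system would also play and cross-fade the clips themselves.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Behavior:
    """Hypothetical event-driven action graph for one character."""
    action: str  # action currently playing
    # (current action, event) -> (next action, blend time in seconds)
    transitions: dict[tuple[str, str], tuple[str, float]] = field(default_factory=dict)

    def on(self, current: str, event: str, target: str, blend: float) -> None:
        """Define a custom transition taken when `event` fires during `current`."""
        self.transitions[(current, event)] = (target, blend)

    def trigger(self, event: str) -> float | None:
        """Fire an event; return the blend time if the action changed, else None."""
        rule = self.transitions.get((self.action, event))
        if rule is None:
            return None  # the current action does not react to this event
        self.action, blend = rule
        return blend


guard = Behavior("patrol")
guard.on("patrol", "hears_noise", "investigate", blend=0.3)
guard.on("investigate", "sees_intruder", "chase", blend=0.1)
guard.on("chase", "loses_intruder", "patrol", blend=0.8)

guard.trigger("sees_intruder")  # ignored while patrolling: returns None
guard.trigger("hears_noise")    # returns 0.3; the guard is now investigating
```

The artist defines the rules once; at run time the character responds to whatever events its environment produces, which is what lets it appear to act of its own volition.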

Powerful new animation control tools in Sumatra make it much easier to orchestrate complex movements among multiple characters or objects in a scene, animating a football team as easily as a quarterback, for example. In Sumatra, artists can preset values for a localized animation system and allow universal dynamics to drive the animation across a given number of keyframes, evaluating collisions and generating animation on the fly. The fully integrated particle system in Sumatra provides yet another layer of reality, allowing artists to treat particles as naturally as they treat any other kind of primitive.
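As a rough illustration of presetting values and letting dynamics generate the keys, the sketch below drops an object from a preset height, integrates gravity once per frame with semi-implicit Euler, resolves collisions with a ground plane, and records one key per frame. The function name, parameters and integration scheme are assumptions chosen for clarity; this is not a description of Sumatra's dynamics engine.

```python
def bake_dynamics(height: float, velocity: float, frames: int, fps: float = 24.0,
                  gravity: float = -9.81, restitution: float = 0.6) -> list[tuple[int, float]]:
    """Hypothetical baker: simulate a falling object, return one (frame, height) key per frame."""
    dt = 1.0 / fps
    y, vy = height, velocity
    keys = [(0, y)]
    for frame in range(1, frames + 1):
        vy += gravity * dt          # semi-implicit Euler: update velocity first
        y += vy * dt                # then position, using the new velocity
        if y < 0.0:                 # collision with the ground plane y = 0
            y = 0.0
            vy = -vy * restitution  # bounce back up, losing some energy
        keys.append((frame, y))
    return keys


# Preset values: dropped from 2 m at rest, simulated for two seconds at 24 fps.
keys = bake_dynamics(height=2.0, velocity=0.0, frames=48)
```

The artist only chooses the starting conditions and material response (`restitution`); the falls and bounces between frames come from the simulation.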

Fast, interactive modeling tools in Sumatra give geometry complete knowledge of how it was built, freeing the artist to experiment with form and shape. The Sumatra modeler adapts equally well to building models from the ground up and to manipulating models for a target resolution.

Pushing the bounds of traditional model manipulation, the Sumatra modeler adds direct manipulation techniques to the classical approaches in Softimage 3D, so that artists can modify all aspects of an object directly and intuitively. Powerful data types incorporated as base classes in the Sumatra architecture provide for complete parity of tools between mesh and parametric surfaces, freeing the artist from remembering what tools work on which objects.

With Sumatra, levels of control and detail are completely in the hands of the artist. The artist can model a character’s head with Bézier spline control, the body with cardinal spline control, and the legs with nonuniform rational B-splines (NURBS), then blend the entire character together with fully relational blended surfaces (including blends on blends) and animate the entire piece. Sumatra also includes a full suite of tools developed for the specific needs of game developers.

Sumatra incorporates the next generation of the Mental Ray™ renderer as a completely integrated system. Sumatra offers truly interactive render control, allowing the user to preview part of a scene or the whole scene. In combination with attribute-space editing tools that provide precise control over texturing, Sumatra eliminates the traditional wall between geometry, texture and rendered output.

Sumatra also provides a sophisticated renderfarm manager for parallel and distributed processing. Because it is compatible with multiple hardware platforms, you can assemble a highly optimized and cost-effective solution for the most demanding rendering tasks: distribute what you want, where you want it, and let the ray tracer determine how to get maximum speed from each workstation.
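How a manager might get the most out of a mixed farm can be sketched with a simple greedy rule: send each frame to whichever workstation would finish it soonest, given how busy that machine already is and how fast it is. This earliest-finish-time heuristic is a swapped-in textbook technique, not Softimage's scheduler, and the host names and relative speeds are hypothetical.

```python
def schedule_frames(frame_costs: list[float],
                    host_speeds: dict[str, float]) -> tuple[dict[str, list[int]], float]:
    """Greedy earliest-finish-time assignment of frames to render hosts (hypothetical)."""
    busy = {host: 0.0 for host in host_speeds}  # time at which each host becomes free
    plan: dict[str, list[int]] = {host: [] for host in host_speeds}
    for frame, cost in enumerate(frame_costs):
        # Pick the host that would finish this frame soonest, given its relative speed.
        host = min(host_speeds, key=lambda h: busy[h] + cost / host_speeds[h])
        busy[host] += cost / host_speeds[host]
        plan[host].append(frame)
    return plan, max(busy.values())  # assignment and total time until the last host finishes


# Six equally expensive frames on a mixed farm; the server renders twice as fast.
plan, makespan = schedule_frames([1.0] * 6, {"nt-station": 1.0, "irix-server": 2.0})
print(plan)      # {'nt-station': [1, 4], 'irix-server': [0, 2, 3, 5]}
print(makespan)  # 2.0
```

The faster machine naturally receives more frames, and both finish at the same time, which is the balance a heterogeneous farm is after.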

The road to Sumatra will be gradual and evolutionary. The product itself is revolutionary.

First, rather than all major components being released at once, many Sumatra-class tools will initially appear as Softimage 3D plug-ins on both Windows NT and IRIX platforms, providing next-generation prototyping, modeling, animation and rendering tools for use in today’s Softimage 3D environment. Sumatra functionality such as surface blending will be incorporated into the current architecture of Softimage 3D. Custom applications based on the Softimage Software Development Kit will work on both Softimage 3D V3.7 and Sumatra, preserving existing investments of time, resources and ideas. Later this year, Softimage will also release a new standalone version of Mental Ray that will provide highly interactive rendering, with material editing, texturing and more. This new renderer will be integrated into the first version of Sumatra.

Second, forward compatibility will be maintained for all customer assets. Data models, animation files, rendering scenes and shaders from Softimage 3D will transfer seamlessly to Sumatra, again protecting existing investments.

Third, all current Softimage 3D features have a functional equivalent in Sumatra, maintaining a continuity that is vital for the immediate application of this next-generation system to current production needs.

Sumatra is more than an evolutionary upgrade of existing products and the current production paradigm. It is a bold new initiative that aims for nothing short of a revolution in the way that 3-D digital media is created and produced. Sumatra embodies a unique and exciting vision in the 3-D animation industry, a vision that embraces both the artists and their world. At no other time in the history of computer animation has Softimage seen a greater opportunity to move artists and the industry to new levels of efficiency and creativity.

Building on the essential principles of artist-oriented workflow and advanced animation control, Softimage will continue its legacy as the leader in 3-D computer animation.

Important notice:

The information contained in this document represents the current view of Softimage Inc. on the issues discussed as of the date of publication. Because Softimage must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Softimage, and Softimage cannot guarantee the accuracy of any information presented after the date of publication.

This document is for informational purposes only. SOFTIMAGE MAKES NO WARRANTIES, EXPRESS OR IMPLIED, IN THIS DOCUMENT.

SOFTIMAGE® is a registered trademark of Softimage Inc., a wholly owned subsidiary of Microsoft Corporation, in the United States, Canada and/or other countries.

Microsoft® and Windows NT® are registered trademarks of Microsoft Corporation in the United States and/or other countries.

All other trademarks belong to their respective owners and are hereby acknowledged.

This document is protected under copyright law. The contents of this document may not be copied or duplicated in any form, in whole or in part, without the express written permission of Microsoft Corporation.